
Introduction: Why This Shift Feels Familiar — Yet Fundamentally Different

In my experience of over two decades in the IT industry, one pattern has always repeated itself. A technology arrives with noise, hype, and oversized promises. Enterprises rush in, tools multiply, costs rise, and then, slowly, reality sets in. Some technologies fade away; others mature quietly and become invisible infrastructure.

This reminds me of the early days of virtualization, the first cloud migrations, and even the mobile-first wave. But what I am witnessing today with AI feels different—not louder, but deeper.

What most articles miss is that the real transformation in AI is no longer happening inside massive data centers or cloud dashboards. It is happening quietly, at the edges of the physical world—inside machines, factories, vehicles, hospitals, cameras, and everyday devices.

This is the rise of Physical AI.

Not as a buzzword, but as a natural evolution of how intelligence moves closer to where action actually happens.

Why the Industry Is Moving Beyond Bigger Models

For the last few years, progress in AI was measured by size. Larger models. More parameters. Bigger training datasets. Higher compute budgets.

And for a while, this worked.

But from my experience, every “bigger is better” phase in technology eventually runs into friction. Mainframes gave way to distributed systems. Monoliths broke into microservices. Centralized storage evolved into edge caching.

AI is now hitting a similar inflection point.

The industry is slowly realizing that intelligence does not always need to be centralized. In many real-world scenarios, sending data to the cloud, waiting for inference, and then acting is simply too slow, too expensive, or too risky.

In Simple Words

Bigger AI models are powerful, but power alone is not enough when decisions need to happen instantly and locally.

When Cloud-Centric AI Started Showing Cracks

This reminds me of early cloud optimism, when everything was expected to move to centralized platforms. Over time, reality forced a more balanced architecture.

Cloud-based AI systems face challenges that become obvious only at scale:

- Latency becomes unacceptable for real-time decisions

- Connectivity cannot be guaranteed everywhere

- Data transfer costs quietly explode

- Privacy and compliance risks increase

- Reliability suffers in mission-critical environments
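
The latency point can be made concrete with a rough per-decision budget. The sketch below is only illustrative: every latency figure, the robot speed, and the function name are assumptions chosen for the example, not measurements from any real system.

```python
# Illustrative latency budget. Every number here is an assumption, not a measurement.

def distance_before_reaction_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance a moving machine covers before a decision can take effect."""
    return speed_m_per_s * (latency_ms / 1000.0)

# Hypothetical per-decision latencies in milliseconds.
CLOUD_PATH_MS = {"uplink": 40.0, "queue_and_inference": 60.0, "downlink": 40.0}
EDGE_PATH_MS = {"local_inference": 15.0}

cloud_ms = sum(CLOUD_PATH_MS.values())
edge_ms = sum(EDGE_PATH_MS.values())

robot_speed = 2.0  # metres per second, assumed
cloud_travel = distance_before_reaction_m(robot_speed, cloud_ms)
edge_travel = distance_before_reaction_m(robot_speed, edge_ms)

print(f"cloud path: {cloud_ms:.0f} ms -> {cloud_travel:.2f} m travelled")
print(f"edge path:  {edge_ms:.0f} ms -> {edge_travel:.2f} m travelled")
```

With these assumed numbers, the machine travels roughly nine times farther before a cloud-routed decision lands than before a local one. The real ratio depends entirely on the network and the hardware involved.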

From my experience, these limitations do not show up in demos. They show up in production, at three in the morning, when systems fail and humans still need to intervene.

Physical AI emerges not as a rejection of the cloud, but as a correction.

The Subtle but Powerful Shift Toward the Edge

Edge computing was the first signal that intelligence needed to move closer to data sources. Physical AI takes this one step further.

It is not just about processing data locally. It is about understanding and acting within the physical environment itself.

Sensors, cameras, motors, robotic arms, vehicles, drones—these are no longer dumb endpoints. They are becoming intelligent participants.

What most articles miss is that this shift is architectural, not cosmetic. It requires rethinking how systems are designed, monitored, and trusted.

Physical AI: Intelligence That Touches the Real World

Physical AI refers to AI systems that:

- Perceive the physical environment

- Interpret real-world signals

- Make autonomous or semi-autonomous decisions

- Act through physical mechanisms

Unlike purely digital AI, mistakes here are not just wrong answers. They can damage equipment, disrupt operations, or endanger lives.

In Simple Words

Physical AI is AI that doesn’t just think or predict—it acts in the real world.

From Prediction to Action: Why Physical AI Changes Everything

In traditional enterprise AI, insights were often consumed by humans. Dashboards informed decisions. Reports guided strategy.

Physical AI closes the loop.

It senses, decides, and acts—sometimes without waiting for human approval.

From my experience, this transition from “decision support” to “decision execution” is the most profound shift enterprises struggle with.

Trust models change. Accountability changes. Even organizational structures change.

Where Physical AI Is Already Winning Quietly

Physical AI is not futuristic. It is already embedded in critical systems:

- Smart manufacturing lines optimizing throughput in real time

- Autonomous warehouse robots coordinating movement

- Medical devices assisting in diagnostics and intervention

- Energy grids balancing load dynamically

- Intelligent traffic systems adapting to real conditions

What most articles miss is that many of these systems do not advertise themselves as “AI-first.” They simply work.

The Hidden Architecture Behind Physical AI Systems

Physical AI systems rely on layered intelligence:

- Perception layers (sensors, vision, signal processing)

- Local inference engines

- Rule-based safety constraints

- Cloud-based learning and updates

- Human oversight mechanisms
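
One way to picture how these layers combine is as an order of precedence when choosing the final command. The sketch below is a minimal illustration under stated assumptions: the `Command` type, the `resolve` function, and the 1.5 m/s limit are all hypothetical, and a real system would involve far more than a single speed value.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Command:
    speed: float   # final speed in m/s
    source: str    # which layer determined the final value

MAX_SAFE_SPEED = 1.5  # assumed rule-based safety limit, in m/s

def resolve(inferred_speed: float, human_override: Optional[float] = None) -> Command:
    """Choose the final command from the human, safety, and inference layers."""
    if human_override is not None:
        # A human request wins over inference, but is still clamped by the safety rule.
        return Command(min(human_override, MAX_SAFE_SPEED), "human")
    if inferred_speed > MAX_SAFE_SPEED:
        # The rule-based constraint caps whatever the local model asked for.
        return Command(MAX_SAFE_SPEED, "safety_rule")
    return Command(inferred_speed, "local_inference")
```

For example, `resolve(3.0)` is capped to 1.5 by the safety rule, while `resolve(3.0, human_override=0.0)` stops the machine because the human request takes precedence.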

From My Experience

The most successful systems are not the most autonomous—they are the most balanced. Human override, explainability, and fail-safe mechanisms matter more than raw intelligence.

India’s Quiet Advantage in the Physical AI Era

From my experience working across Indian IT and enterprise ecosystems, India holds an underrated advantage.

We combine:

- Strong engineering talent

- Cost-efficient hardware integration

- Manufacturing scale

- Growing startup ecosystems

- Deep exposure to real-world constraints

Physical AI rewards practical problem-solving over theoretical perfection. That plays to India’s strengths.

The Role of AI Agents in Physical Systems

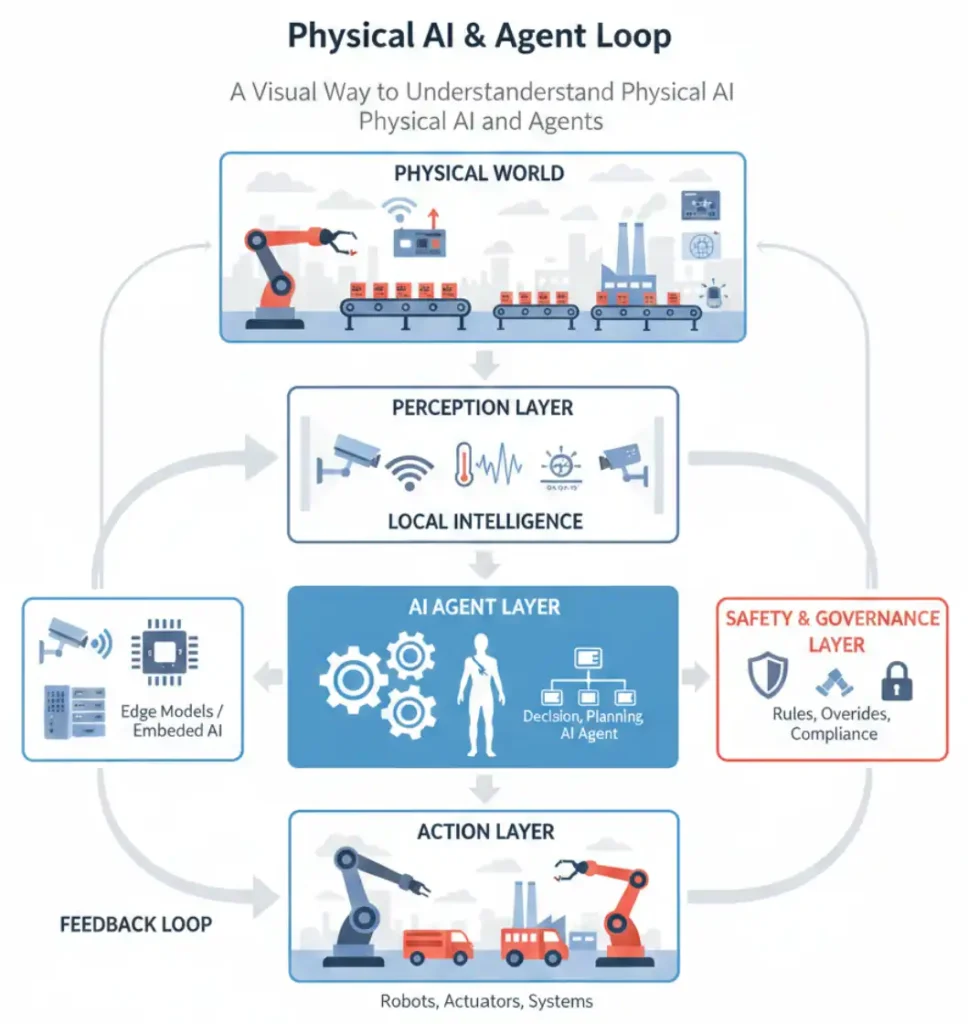

A Visual Way to Understand Physical AI and Agents

Image credit: generated by Google Gemini

Diagram Concept: Physical AI & Agent Loop

- Physical World (Machines, Sensors, Environment)

- ↓ Perception Layer (Cameras, IoT Sensors, Signals)

- ↓ Local Intelligence (Edge Models / Embedded AI)

- ↓ AI Agent Layer (Decision, Planning, Coordination)

- ↓ Safety & Governance Layer (Rules, Overrides, Compliance)

- ↓ Action Layer (Robots, Actuators, Systems)

- ↓ Feedback Loop back to Physical World

Understanding the Physical AI & Agent Loop

The AI Agent sits at the center, not as the smartest component, but as the most responsible one. It balances speed with safety, autonomy with control.

This diagram represents a continuous cycle where machines interact with the real world using intelligence. Here is how the loop functions, step-by-step:

Step 1: The Physical World (The Origin)

Everything starts in the Physical World. This includes factory floors, smart cities, or autonomous vehicles. It consists of the actual hardware (machines) and the environment they operate in.

Step 2: Perception Layer (Gathering Data)

The system “sees” and “feels” the world through the Perception Layer.

- Cameras: Provide visual data.

- IoT Sensors: Measure temperature, pressure, or motion.

- Signals: Capture sound or radio frequencies.

This layer converts raw physical reality into digital data.

Step 3: Local Intelligence (Processing at the Edge)

Before the data goes to a massive cloud server, it often hits Local Intelligence (Edge Models). These are small, fast AI models embedded directly into the hardware. They filter noise and handle immediate tasks that require zero lag, like detecting a person standing in front of a moving robot.
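
As a minimal sketch of such an immediate, local check, the code below decides whether a robot must stop based on detections from an on-device model. The `Detection` format, the confidence threshold, and the stop radius are assumptions made for illustration; no particular vision library is implied.

```python
from typing import NamedTuple, Sequence

class Detection(NamedTuple):
    label: str
    confidence: float   # 0.0 to 1.0, as reported by a hypothetical on-device model
    distance_m: float   # estimated distance from the robot, in metres

def must_stop(detections: Sequence[Detection],
              min_confidence: float = 0.5,
              stop_radius_m: float = 2.0) -> bool:
    """Return True if any sufficiently confident person detection is inside the stop radius."""
    return any(
        d.label == "person"
        and d.confidence >= min_confidence
        and d.distance_m <= stop_radius_m
        for d in detections
    )
```

The check needs no network call, which is the point: it can run on every frame regardless of connectivity.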

Step 4: AI Agent Layer (The “Brain”)

This is the core of the system. The AI Agent doesn’t just process data; it makes decisions.

- Planning: It figures out the best sequence of events.

- Coordination: It manages multiple tasks simultaneously.

- Reasoning: It determines why an action should be taken based on its current goals.

Step 5: Safety & Governance (The Guardrails)

Before an AI decision becomes a physical movement, it must pass through Safety & Governance. This layer checks the plan against pre-defined rules, compliance standards, and safety overrides. If a plan is deemed “unsafe,” this layer blocks the action to prevent accidents.
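
A minimal sketch of such a gate, assuming a plan is a list of actions and each rule is a simple predicate. The `Action` fields, the two example rules, and the zone labels are hypothetical and exist only to show the shape of the check.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class Action:
    name: str
    speed: float   # m/s
    zone: str      # hypothetical zone label

# Each rule is (description, predicate that must hold for the action to be allowed).
Rule = Tuple[str, Callable[[Action], bool]]

RULES: List[Rule] = [
    ("speed must not exceed 1.5 m/s", lambda a: a.speed <= 1.5),
    ("no entry into restricted zones", lambda a: a.zone != "restricted"),
]

def check_plan(plan: List[Action], rules: List[Rule] = RULES) -> Tuple[bool, List[str]]:
    """Approve the plan only if every action satisfies every rule."""
    violations = [
        f"{action.name}: {description}"
        for action in plan
        for description, holds in rules
        if not holds(action)
    ]
    return (not violations, violations)
```

Returning the list of violations alongside the verdict keeps a blocked plan explainable, which matters when a human has to review why the system refused to act.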

Step 6: Action Layer (The Result)

Once cleared, the command moves to the Action Layer. This is where the digital becomes physical again. Robots move their arms, actuators turn valves, and automated systems execute the decision made by the AI Agent.

Step 7: Feedback Loop (The Learning Cycle)

The action taken in Step 6 changes the Physical World. These changes are immediately picked up by the sensors in Step 2, creating a Feedback Loop. This allows the AI to learn from the results of its actions and adjust its next move in real time.
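
The seven steps can be sketched as a small simulation. This is a deliberately simplified illustration: it assumes a one-dimensional position, noise-free sensing, and a proportional rule standing in for the agent. It shows the loop adjusting its next move from feedback, but not any learning, and the gain and step limit are arbitrary assumptions.

```python
from typing import List

def run_loop(target: float, position: float, steps: int,
             gain: float = 0.5, max_step: float = 1.0) -> List[float]:
    """Simulate sense -> decide -> safety check -> act, repeated `steps` times."""
    history = [position]
    for _ in range(steps):
        measured = position                                # Step 2: perception (noise-free here)
        error = target - measured                          # Steps 3-4: decide how far to move
        command = gain * error
        command = max(-max_step, min(max_step, command))   # Step 5: safety clamp on each move
        position = position + command                      # Step 6: the action changes the world
        history.append(position)                           # Step 7: the next pass senses the new state
    return history

print(run_loop(target=10.0, position=0.0, steps=3))
```

Each pass through the loop sees the result of the previous action, so the safety clamp limits early moves and the steps shrink as the position approaches the target.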

AI agents act as coordinators between perception, decision-making, and action.

In physical environments, agents manage:

- Task prioritization

- Conflict resolution

- Resource optimization

- Safety constraints
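
Task prioritization, the first item above, can be sketched with a priority queue. The `TaskQueue` class, the task names, and the priority values are hypothetical. The only idea being shown is that safety-related work is served before routine work, with ties handled in arrival order.

```python
import heapq
import itertools
from typing import List, Tuple

class TaskQueue:
    """Lower priority number runs first; ties run in arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, name: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), name))

    def next_task(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

tasks = TaskQueue()
tasks.submit("restock shelf", priority=5)
tasks.submit("emergency stop check", priority=0)
tasks.submit("recharge battery", priority=5)
```

Here the emergency stop check is served first even though it arrived second, and the two routine tasks keep their submission order.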

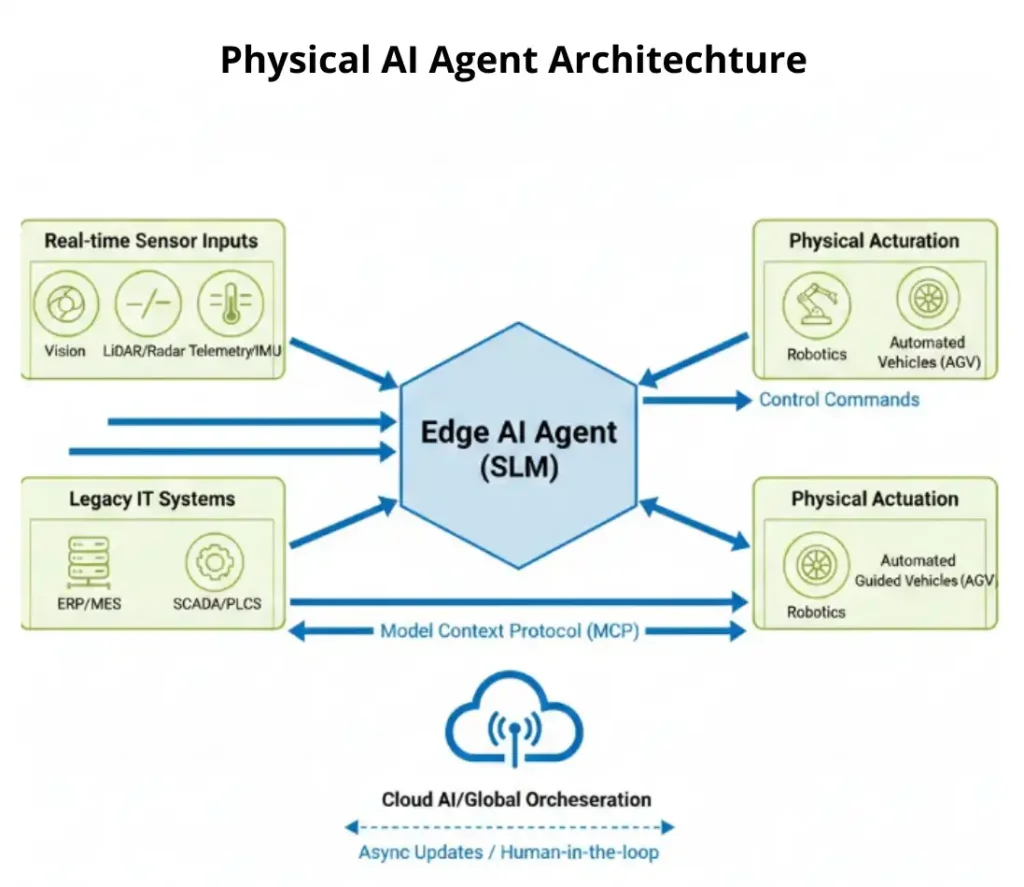

Inside the Blueprint: The 2026 Physical AI Agent Architecture

To understand how we move from a “chatbot” to a “worker,” we have to look under the hood. What most articles miss is that Physical AI requires a circular, real-time feedback loop rather than a linear request-response path. This diagram represents the enterprise architecture I am seeing adopted across the industry today.

A Step-by-Step Breakdown of the Workflow:

- The Ingestion Phase (Real-Time Sensor Inputs): Unlike a web app that waits for a user to type, Physical AI is “always on.” It ingests high-frequency data from LiDAR, computer vision, and IMU (Inertial Measurement Unit) sensors. This is the AI’s “nervous system.”

- The Contextual Bridge (Legacy IT Systems via MCP): From my experience, the biggest hurdle in IT has always been the “silo.” Here, the Model Context Protocol (MCP) acts as a universal translator. It allows the AI to check the ERP (Enterprise Resource Planning) or SCADA systems to ensure that the physical action it’s about to take aligns with business logic (e.g., “Is this part actually in stock?”).

- The Brain (Edge AI Agent / SLM): The processing doesn’t happen in a distant cloud. It happens on an SLM (Small Language Model) located on-site. This is where “Inference Scaling” occurs—the model “thinks” through various physical simulations before committing to a movement.

- The Execution (Physical Actuation): Once the decision is made, the agent sends high-speed control commands to the hardware—whether it’s a robotic arm, an Automated Guided Vehicle (AGV), or an industrial drone.

- The Safety Valve (Cloud Orchestration & Human-in-the-loop): The Cloud is relegated to a “supervisor” role. It handles asynchronous updates (learning from the day’s work) and provides a portal for human intervention. This ensures that if the AI encounters a scenario it doesn’t recognize, it can “phone home” without stopping the entire production line.
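
The “phone home” behaviour in the last step can be sketched as a non-blocking escalation. In the illustration below, an in-process queue stands in for the cloud or human review portal. The confidence threshold, the action names, and the `decide` function are assumptions made for the example.

```python
import queue

# Stands in for an asynchronous cloud or human review portal (assumption).
review_queue = queue.Queue()

def decide(observation: dict, confidence: float, threshold: float = 0.8) -> str:
    """Act locally when confident; otherwise take a conservative action and escalate."""
    if confidence >= threshold:
        return "proceed"
    # Non-blocking hand-off: the line keeps running in a conservative mode
    # while the unfamiliar case waits for review.
    review_queue.put_nowait({"observation": observation, "confidence": confidence})
    return "slow_down_and_wait"
```

The design choice is that escalation never blocks the control path. The edge agent falls back to a safe behaviour immediately, and the review happens on its own schedule.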

From My Experience

In every large system I have seen succeed, intelligence alone was never enough. Coordination, guardrails, and accountability mattered more than brilliance.

In Simple Words

AI agents are the “conductors” that help physical AI systems work together without chaos.

Trust, Safety, and Responsibility in Physical AI

Physical AI forces uncomfortable but necessary conversations:

- Who is responsible when systems fail?

- How much autonomy is acceptable?

- How do we audit decisions made in milliseconds?
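
On the audit question, one possible approach is an append-only decision log in which each record's hash covers the previous record, so later tampering is detectable. This is a sketch under stated assumptions, not a compliance-grade design. The record fields and function names are hypothetical.

```python
import hashlib
import json
from typing import List

def _digest(body: dict) -> str:
    """Stable SHA-256 over a record body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_record(log: List[dict], decision: str, inputs: dict, timestamp_ns: int) -> dict:
    """Append a decision record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"ts_ns": timestamp_ns, "decision": decision, "inputs": inputs, "prev": prev_hash}
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record

def verify(log: List[dict]) -> bool:
    """Recompute every hash and check that each record links to the one before it."""
    prev_hash = "0" * 64
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True
```

Editing any past record changes its hash and breaks the chain, so `verify` returns False from that point on.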

From my experience, organizations that delay these questions pay for it later.

What the Next Five Years Likely Look Like

This reminds me of the period after cloud adoption stabilized. The hype faded, and what remained was architecture discipline.

Over the next five years, Physical AI will not explode—it will embed.

Expect:

- Smaller, specialized models replacing giant general-purpose ones at the edge

- Hybrid architectures where cloud trains and edge executes

- AI agents becoming standard system components, not optional add-ons

- Stronger regulatory and safety frameworks

- Clear separation between experimental AI and production-grade Physical AI

From my experience, the winners will not be companies with the smartest models, but those with the safest and most reliable systems.

Why Physical AI Is a Leadership Problem, Not a Technology Problem

What most articles miss is that Physical AI adoption fails more due to leadership gaps than technical ones.

When intelligence starts making decisions in the physical world:

- Risk ownership becomes unclear

- Accountability shifts from teams to systems

- Traditional KPIs stop working

From my experience, organizations that succeed treat Physical AI as an operating model change, not an IT upgrade.

How Physical AI Changes the Role of Humans

This is not a story of replacement. It is a story of repositioning.

Humans move from:

- Manual control → Supervision

- Repetitive tasks → Exception handling

- Reactive decisions → System design

In Simple Words

Physical AI handles speed and scale. Humans handle judgment and responsibility.

The SEO Reality: Why Physical AI Will Outrank Generic AI Content

From my experience writing and observing high-performing content, Google now rewards:

- Experience-based narratives

- Clear opinions backed by practice

- Original framing instead of recycled definitions

Physical AI sits at the intersection of AI, hardware, safety, and operations—making it naturally authoritative when written well.

Frequently Asked Questions

What is Physical AI in simple terms?

Physical AI is AI that interacts with the real world through machines and devices. From my experience, it matters because decisions happen instantly where data is generated, not after a round trip to the cloud.

How is Physical AI different from edge AI?

Edge AI focuses on local inference. Physical AI goes further by combining perception, decision-making, AI agents, and physical action in a closed loop.

Are AI agents mandatory for Physical AI?

In complex systems, yes. AI agents provide coordination, safety checks, and prioritization. Without them, systems become fragile and unpredictable.

Is Physical AI safe for critical industries?

It can be, if designed responsibly. From my experience, safety layers, human override, and audits matter more than model accuracy.

Will Physical AI replace human workers?

It will change roles rather than remove them. Humans shift toward oversight, design, and accountability.

Final Thoughts: Why This Shift Feels Inevitable

After watching multiple technology cycles rise and fall, Physical AI stands out because it aligns intelligence with reality.

- It does not chase scale for the sake of it.

- It does not worship models.

- It respects constraints.

It does not promise magic. It promises execution. And in the real world, that makes all the difference.

From my experience, technologies that respect constraints are the ones that last.

Physical AI is not the future of AI.

It is AI finally growing up.

If you are an IT leader, architect, or technologist, now is the time to look beyond dashboards and demos.

Start asking:

- Where should intelligence actually live?

- What decisions must remain human?

- What failures are unacceptable?

Those answers—not models—will define your success in the Physical AI era.

This reminds me of the post-cloud stabilization phase. Not explosive growth, but intelligent consolidation.

Expect:

- Smaller, specialized models

- Hybrid cloud-edge architectures

- Stronger regulatory oversight

- More human-in-the-loop systems

Physical AI will not replace humans. It will reshape how humans work with machines.

Frequently Asked Questions

What is Physical AI in practical terms?

Physical AI refers to AI systems that sense, decide, and act in the real world using physical devices. From my experience, it is less about theory and more about reliability, safety, and real-time execution.

Is Physical AI replacing cloud-based AI?

No. What most enterprises discover is that physical AI complements the cloud. Cloud systems handle learning and coordination, while physical AI handles immediate, local decisions.

Why is Physical AI important now?

From my experience, latency, cost, and reliability limits of centralized AI are forcing intelligence closer to where action happens. The technology finally caught up with the need.

Is Physical AI risky?

Yes, if designed poorly. Physical AI systems must include safety layers, human oversight, and fail-safes. What most articles miss is that risk comes from over-automation, not intelligence itself.

Will Physical AI impact jobs?

It will change roles more than eliminate them. From my experience, humans move toward supervision, exception handling, and system design rather than manual control.

To learn more about the “Role of Agents in Artificial Intelligence,” read my blog post linked below:

Agents in Artificial Intelligence
